
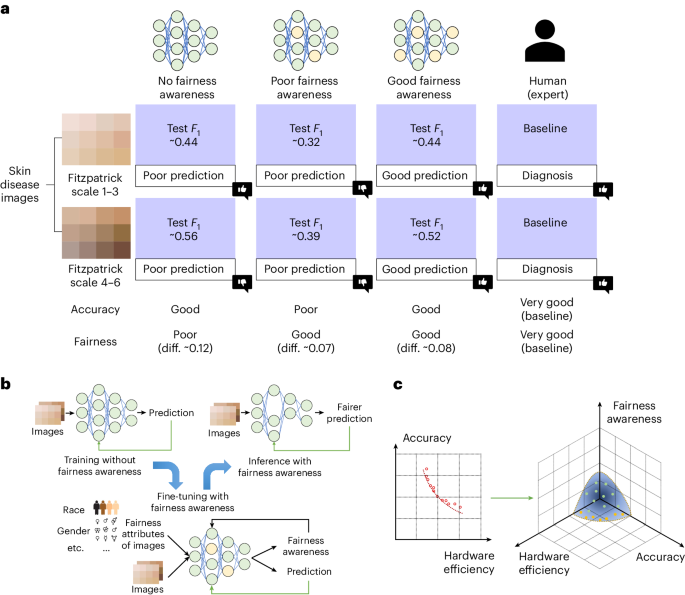

ABSTRACT

Ensuring the fairness of neural networks is crucial when applying deep learning techniques to critical applications such as medical diagnosis and vital signal monitoring. However, maintaining fairness becomes increasingly challenging when deploying these models on platforms with limited hardware resources, as existing fairness-aware neural network designs typically overlook the impact of resource constraints. Here we analyse how the underlying hardware affects the pursuit of fairness, using neural network accelerators with compute-in-memory architecture as examples. We first investigate the relationship between the hardware platform and fairness-aware neural network design. We then discuss how hardware advancements in emerging computing-in-memory devices, in terms of on-chip memory capacity and device variability management, affect neural network fairness. We also identify challenges in designing fairness-aware neural networks on such resource-constrained hardware and consider potential approaches to overcome them.
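The abstract's point that device variability can affect fairness can be made concrete with a small Monte Carlo experiment. The sketch below is illustrative only and rests on assumptions that are not taken from the article: a hypothetical two-feature linear classifier, additive Gaussian weight noise as a simplified stand-in for computing-in-memory device variation, and the gap between the best and worst per-group accuracy as the unfairness measure. The function names (`group_accuracy_gap`, `perturb`, `predict`, `mean_gap_under_variation`) and the toy data are hypothetical.

```python
import random
from typing import Hashable, List, Sequence


def group_accuracy_gap(preds: Sequence[int], labels: Sequence[int],
                       groups: Sequence[Hashable]) -> float:
    """Unfairness as (best group accuracy) - (worst group accuracy).

    This max-minus-min gap is one common choice, assumed here for illustration.
    """
    correct: dict = {}
    total: dict = {}
    for p, y, g in zip(preds, labels, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + int(p == y)
    accs = [correct[g] / total[g] for g in total]
    return max(accs) - min(accs)


def perturb(weights: Sequence[float], sigma: float, rng: random.Random) -> List[float]:
    """Add i.i.d. Gaussian noise (std = sigma) to every weight.

    Simplified stand-in for device variation (assumption); real device models
    may be multiplicative, state-dependent or non-Gaussian.
    """
    return [w + rng.gauss(0.0, sigma) for w in weights]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Hypothetical toy linear classifier: label 1 if w.x + b > 0, else 0."""
    score = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if score > 0 else 0


def mean_gap_under_variation(weights: Sequence[float], bias: float,
                             xs: Sequence[Sequence[float]], labels: Sequence[int],
                             groups: Sequence[Hashable], sigma: float,
                             trials: int = 100, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected group-accuracy gap under weight noise.

    For simplicity only the weights are perturbed; the bias is left exact.
    """
    rng = random.Random(seed)
    gaps = []
    for _ in range(trials):
        noisy = perturb(weights, sigma, rng)  # one sampled noisy "chip"
        preds = [predict(noisy, bias, x) for x in xs]
        gaps.append(group_accuracy_gap(preds, labels, groups))
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    # Toy data: group B's samples sit closer to the decision boundary (smaller margin).
    w, b = [1.0, -1.0], 0.0
    xs = [(1.0, 0.0), (0.0, 1.0), (0.2, 0.1), (0.1, 0.2)]
    ys = [1, 0, 1, 0]
    gs = ["A", "A", "B", "B"]
    for s in (0.0, 0.1, 0.5):
        gap = mean_gap_under_variation(w, b, xs, ys, gs, s)
        print(f"sigma={s}: mean group-accuracy gap = {gap:.3f}")
```

With no noise the toy model classifies every sample correctly, so the gap is zero; as the noise level grows, samples with small margins flip more often, and the gap between groups can open up. The example only shows how a per-group metric can be tracked as simulated variation increases; it does not reproduce the article's experiments or results.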


DATA AVAILABILITY

Two public datasets are used in this study: ISIC 2019, which can be downloaded at https://challenge.isic-archive.com/data/#2019, and Fitzpatrick-17k, which can be downloaded at https://github.com/mattgroh/fitzpatrick17k. The data that support the findings of this study are available from the corresponding authors upon request.

CODE AVAILABILITY

The code that supports the findings of this study is available from the corresponding authors upon request.

ACKNOWLEDGEMENTS

This work is supported in part by the AI Chip Center for Emerging Smart Systems, by InnoHK funding, Hong Kong Special Administrative Region, and by the Health Equity Data Lab in the Lucy Family Institute for Data & Society (Grant No. HEDL 23-005).

AUTHOR INFORMATION

Author notes

* These authors contributed equally: Yuanbo Guo, Zheyu Yan.

AUTHORS AND AFFILIATIONS

* Department of Computer Science and Engineering, University of Notre Dame, Notre Dame, IN, USA: Yuanbo Guo, Zheyu Yan, Xiaoting Yu, Qingpeng Kong, Dewen Zeng, Yawen Wu, Zhenge Jia & Yiyu Shi
* Dos Pueblos High School, Goleta, CA, USA: Joy Xie
* Canyon Crest Academy, San Diego, CA, USA: Kevin Luo

CONTRIBUTIONS

Y.G. and Z.Y. contributed to all aspects of the project. Y.G. and Z.Y. contributed equally to this project. X.Y. and Q.K. contributed to data collection and the preliminary experiments. J.X., K.L., D.Z. and Y.W. contributed to the discussion and writing. Z.J. and Y.S. contributed to project planning, development, discussion and writing. Z.J. and Y.S. jointly supervised the work.

CORRESPONDING AUTHORS

Correspondence to Zhenge Jia or Yiyu Shi.

ETHICS DECLARATIONS

COMPETING INTERESTS

The authors declare no competing interests.

PEER REVIEW

PEER REVIEW INFORMATION

_Nature Electronics_ thanks Seyoung Kim, Xue Lin and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

ADDITIONAL INFORMATION

PUBLISHER’S NOTE

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

RIGHTS AND PERMISSIONS

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

ABOUT THIS ARTICLE

CITE THIS ARTICLE

Guo, Y., Yan, Z., Yu, X. _et al._ Hardware design and the fairness of a neural network. _Nat Electron_ 7, 714–723 (2024). https://doi.org/10.1038/s41928-024-01213-0

* Received: 19 September 2023
* Accepted: 26 June 2024
* Published: 25 July 2024
* Issue Date: August 2024
* DOI: https://doi.org/10.1038/s41928-024-01213-0